How to maximise the potential of point cloud data

Deriving actionable data from point clouds is key — and automation is the answer

Lidar has hit the mainstream in recent years – a fact underlined by Apple’s inclusion of lidar capabilities in its latest hardware. Driving this new adoption are advances in registration algorithms, which are simplifying the process of creating reality capture datasets.

Yet while point cloud data contains a vast amount of spatial detail, it still isn’t being used to its full potential. Generating point clouds is one thing, but extracting actionable data from them is another altogether. Without this critical step, we can’t apply point cloud data to real-world applications.

One way to bridge the gap is by drilling down into individual components within the data to create better, more detailed models with a greater array of use cases. This is where data segmentation or classification comes in — and automating this process is key to unlocking the potential of point cloud data.

Lidar data classification – a definition

Before we go any further, let’s define what we’re talking about. Broadly speaking, data classification is the process of organising unstructured data into distinct categories.

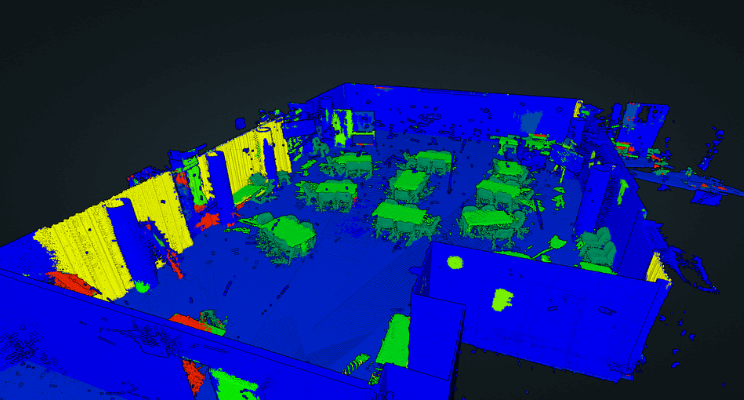

When applied to lidar, data classification assigns meaning to data points by categorising them as distinct objects or object characteristics. In other words, it is the process of differentiating a tree from a building, or a window from a wall.

Let’s look at some examples of lidar data classification in action:

- Construction teams use lidar data classification to automatically detect building components, helping them to track progress, detect risk, and assess material needs

- Urban planners use it to create detailed inventories when developing and maintaining smart-city and digital-twin models

- Environmental scientists use it to monitor large-scale geological trends – even to the level of identifying tree species and estimating density

In all these examples, clusters of lidar points are assigned a class or tag that differentiates them as an object type. Lidar scans can be classified along multiple lines — such as bare earth, water, vegetation, or buildings – and are often defined from the aerial capture domain.

The American Society for Photogrammetry and Remote Sensing (ASPRS) has standardised the classification codes for LAS formats, defining different classes using numeric integer codes in LAS files. While this is a valuable set of standards, the process of generating this data manually is too time-intensive to be used with any real impact.

Manual vs automated classification

To learn about the spatial properties and objects found within point clouds, we have to analyse the data. This can be a time-consuming and repetitive job when performed manually, making it a great use case for automation.

There are four different ways that data classification can be performed. Starting with fully manual, each stage pushes us further forward on the journey to full automation. Today, the best solutions on the market fit somewhere in stage two. Let’s take a closer look.

Stage 1: Manual

Until fairly recently, data classification was performed 100% manually. This involved assessing point clouds and assigning point clusters with certain classifications.

While it would be possible to semi-automate some repeated tasks, for the most part, this was a very repetitive and time-consuming task. Imagine having to manually assign an object like a tree, again and again.

As this process was largely dependent on the human eye, it offered a high level of accuracy, but at a huge cost in terms of time and resources.

Stage 2: Cross-checked teaching

In this stage, we move towards more sophisticated processes, including the application of artificial intelligence (AI) and machine learning (ML). But while algorithms are able to handle the initial classification of objects, humans are still needed to ensure accuracy by cross-checking classifications.

Algorithms at this stage remove the need for cross-checking the actual processes. What’s more, automated classification is able to learn from human input, allowing it to fine-tune itself over time. This results in ever-increasing levels of accuracy and less manual input.

Stage 3: Automated verification

As algorithms improve, fewer classifications need to be cross-checked. At this point, we can assign probabilities for each classification, complete with parameters that automatically generate cross-checks based on certainty levels. Setting different levels of certainty allows users to make a tradeoff between speed and accuracy.

The level of manual cross-checking required varies from project to project. For complex projects featuring a lot of novel data, you’ll need more human input. But like in stage 2, human input at this stage helps the algorithm to become increasingly accurate and efficient.

Stage 4: Fully automated

A fully automated system is the ultimate goal for data classification algorithms. This stage represents a superior, virtually perfect version of stage 3, where the algorithm is so advanced that it can assign error-free classifications, without the need for human intervention.

That said, the need for occasional manual reviews will always remain in any automated lidar classification system, to ensure quality and build trust in the system.

How Vercator is revolutionising point cloud classification

We believe that automation and AI are key to unlocking the potential of point cloud data. We have already revolutionised point cloud processing using cloud-based automation. Now, we aim to simplify the classification process using AI.

With our registration algorithms, you can combine multiple data sets quickly and easily, whether the data comes from mobile scans or traditional point cloud data sets.

Our approach can be summed up by two key features

- Unique algorithm processing: Our algorithms are able to extrapolate directional vectors from data while still retaining its unique and defining characteristics. This allows for smarter, more robust processing.

- Cloud-ready processing: By leveraging cloud-computing power, Vercator is able to process multiple scans simultaneously, cutting processing time significantly. This technique can also accelerate analysis during the classification process.

Together, these capabilities unlock the power and flexibility of scan data, saving you time and money in the process.

Better software leads to better outcomes

With all the talk of automation and self-learning algorithms, you may be wondering how accurate and trustworthy is automated point cloud classification?

Our answer to this question would be ‘trust but verify.’ Automated point cloud classification is still a work in progress, but its capabilities are improving all the time. We’re not far off extending its impact beyond the aerial domain, building highly automated workflows that will change the classification game forever.

The key at this stage is to sift through the hype and choose the right software. The best automation software gives you clarity around certainty levels, alerting you to specific issues that need to be cross-checked.

Ultimately, it all comes back to outcomes. The better the software, the better the outcomes, and the greater the level of trust. By automating the classification process, you’ll boost efficiency and save time and money, allowing you to focus your resources on growth and innovation.

If you’d like to give Vercator a try, you can arrange a demo or trial at vercator.com.

Tags: classification